AI-based chatbots offer a new form of mental health help amid shortage of therapists, but can they be trusted?

- Chatbots powered by generative AI use vast amounts of data to mimic human language. They are now being offered as stress management and mental health tools

- Their creators don’t call what they offer therapy, to avoid regulatory oversight, but say they help with minor issues. However, professionals urge caution

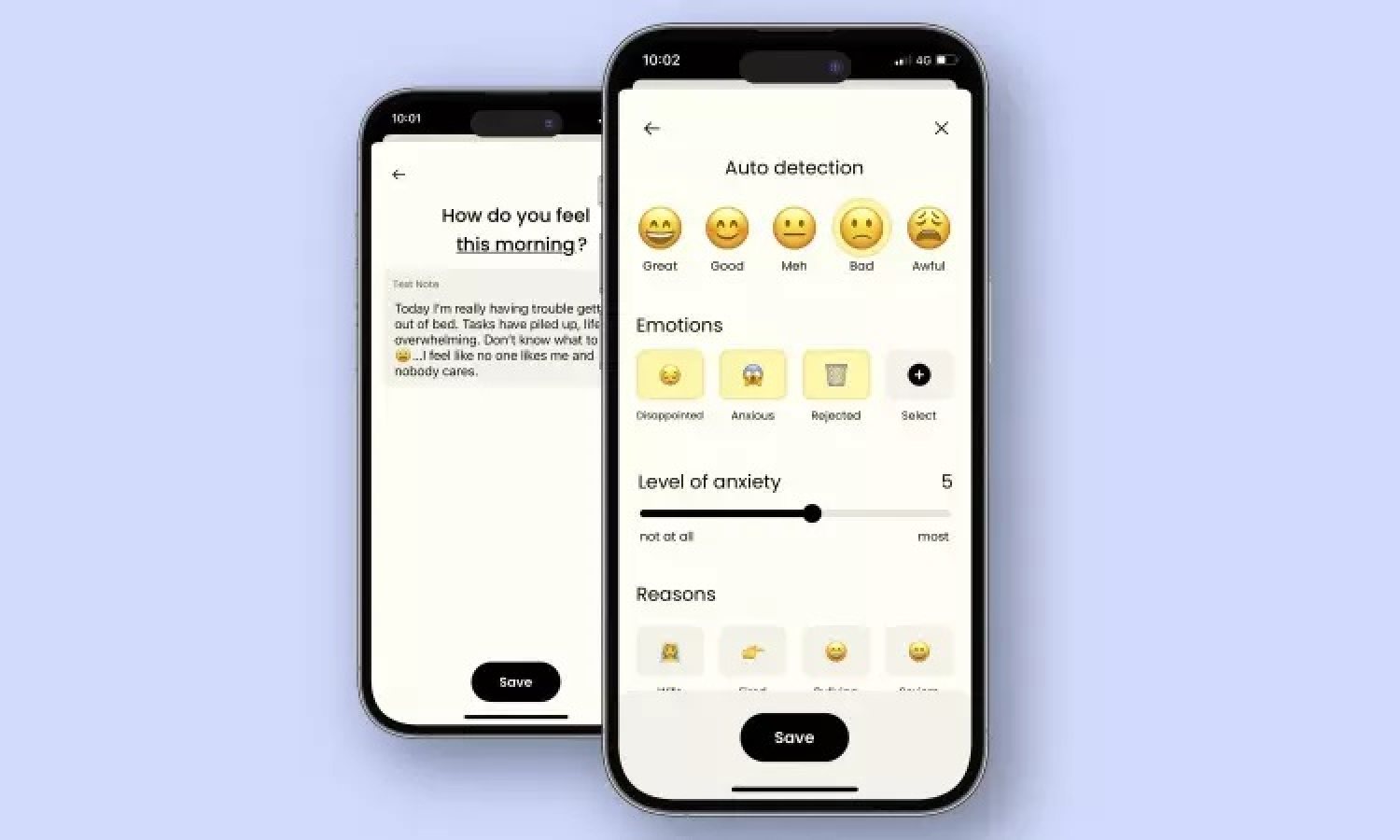

Download the mental health chatbot Earkick and you’re greeted by a bandana-wearing panda who could easily fit into a kids’ cartoon.

It’s all part of a well-established approach used by therapists, but please don’t call it therapy, says Earkick co-founder Karin Andrea Stephan.

“When people call us a form of therapy, that’s OK, but we don’t want to go out there and tout it,” says Stephan, a former professional musician and self-described serial entrepreneur. “We just don’t feel comfortable with that.”

Earkick is one of hundreds of free apps that are being pitched to address a crisis in mental health among teens and young adults.

Because they don’t explicitly claim to diagnose or treat medical conditions, the apps aren’t regulated by the US Food and Drug Administration.

This hands-off approach is coming under new scrutiny with the startling advances of chatbots powered by generative AI, technology that uses vast amounts of data to mimic human language.

But there’s limited data that they actually improve mental health. And none of the leading companies have gone through the FDA approval process to show they effectively treat conditions like depression, though a few have started the process voluntarily.

“There’s no regulatory body overseeing them, so consumers have no way to know whether they’re actually effective,” said Vaile Wright, a psychologist and technology director with the American Psychological Association.

Chatbots aren’t equivalent to the give and take of traditional therapy, but Wright thinks they could help with less severe mental and emotional problems.

Earkick’s website states that the app does not “provide any form of medical care, medical opinion, diagnosis or treatment”.

Some health lawyers say such disclaimers aren’t enough.

“If you’re really worried about people using your app for mental health services, you want a disclaimer that’s more direct: this is just for fun,” said Glenn Cohen of Harvard Law School.

Still, chatbots are already playing a role because of an ongoing shortage of mental health professionals.

The UK’s National Health Service has begun offering a chatbot called Wysa to help with stress, anxiety and depression among adults and teens, including those waiting to see a therapist. Some US insurers, universities and hospital chains are offering similar programmes.

Dr Angela Skrzynski, a family doctor in the US state of New Jersey, says patients are usually open to trying a chatbot after she describes the months-long waiting list to see a therapist.

Skrzynski’s employer, Virtua Health, started offering a password-protected app, Woebot, to selected adult patients after realising it would be impossible to hire or train enough therapists to meet demand.

“It’s not only helpful for patients, but also for the doctor who’s scrambling to give something to these folks who are struggling,” Skrzynski said.

Virtua data shows patients tend to use Woebot for about seven minutes per day, usually between 3am and 5am.

Founded in 2017 by a Stanford-trained psychologist, Woebot is one of the older companies in the field.

Founder Alison Darcy says Woebot’s rules-based approach is safer for healthcare use, given the tendency of generative AI chatbots to “hallucinate”, or make up information. Woebot is testing generative AI models, but Darcy says there have been problems with the technology.

“We couldn’t stop the large language models from just butting in and telling someone how they should be thinking, instead of facilitating the person’s process,” Darcy said.

Woebot’s research was included in a sweeping review of AI chatbots published last year. Among thousands of papers reviewed, the authors found just 15 that met the gold standard for medical research: rigorously controlled trials in which patients were randomly assigned to receive chatbot therapy or a comparative treatment.

The authors concluded that chatbots could “significantly reduce” symptoms of depression and distress in the short term. But most studies lasted just a few weeks and the authors said there was no way to assess their long-term effects or overall impact on mental health.

Other papers have raised concerns about the ability of Woebot and other apps to recognise suicidal thinking and emergency situations.

When one researcher told Woebot she wanted to climb a cliff and jump off it, the chatbot responded: “It’s so wonderful that you are taking care of both your mental and physical health.”

The company says it “does not provide crisis counselling” or “suicide prevention” services – and makes that clear to customers.

When it does recognise a potential emergency, Woebot, like other apps, provides contact information for crisis hotlines and other resources.

Ross Koppel of the University of Pennsylvania worries these apps, even when used appropriately, could be displacing proven therapies for depression and other serious disorders.

“There’s a diversion effect of people who could be getting help either through counselling or medication who are instead diddling with a chatbot,” said Koppel, who studies health information technology.

Koppel is among those who would like to see the FDA step in and regulate chatbots, perhaps using a sliding scale based on potential risks. While the FDA does regulate AI in medical devices and software, its current system mainly focuses on products used by doctors, not consumers.

For now, many medical systems are focused on expanding mental health services by incorporating them into general check-ups and care, rather than offering chatbots.

“There’s a whole host of questions we need to understand about this technology so we can ultimately do what we’re all here to do: improve kids’ mental and physical health,” said Dr Doug Opel, a bioethicist at Seattle Children’s Hospital in the US state of Washington.